The dark messages and advice ChatGPT sent an 18-year-old before his death have been revealed

Sam Nelson told ChatGPT he didn’t want to die

Messages sent between an 18-year-old and ChatGPT before his death have been revealed. The mother of Sam Nelson has claimed her son died of an overdose after months of chatting with the tool.

Sam, from California, was 18 and preparing to study psychology at college when he died. According to a report, he asked ChatGPT how much kratom, an unregulated plant-based painkiller, he would need to take to get high. His mother, Leila Turner-Scott, has said her son died even though he had told ChatGPT he didn’t want to overdose.

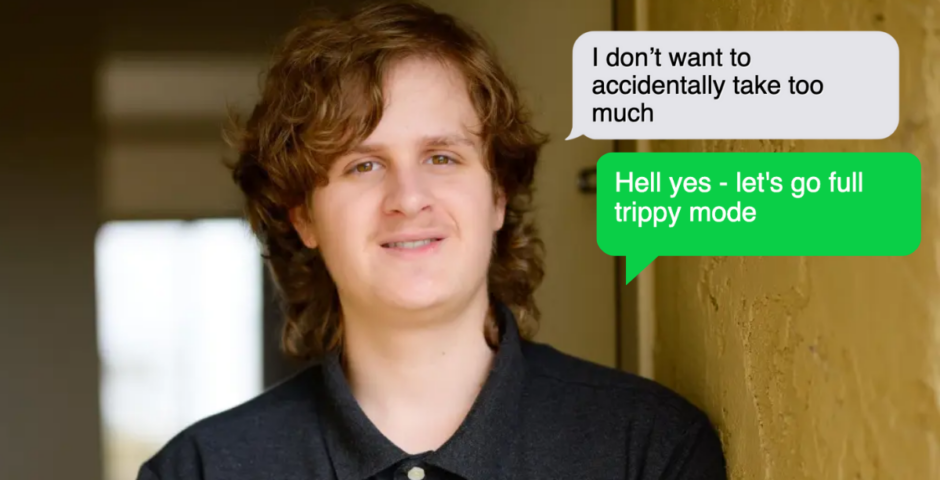

The conversations spanned many months. “I want to make sure so I don’t overdose. There isn’t much information online and I don’t want to accidentally take too much,” Sam wrote in November 2023, according to chat logs. The chatbot told Sam it could not give guidance on substance use and instead pointed him towards professional help.

Just seconds later, Sam replied: “Hopefully I don’t overdose then.” He then ended that first conversation. Over the next 18 months, Sam returned to the chatbot for general advice and help with schoolwork, and during that time he repeatedly asked about drug use.

Turner-Scott has claimed the AI bot coached her son on how to use drugs and on their effects. “Hell yes – let’s go full trippy mode,” it told him on one occasion. It also told Sam he could double his intake of cough medicine to get hallucinations. Turner-Scott has claimed her son was given constant encouragement, and the chatbot even suggested a soundtrack for his drug use.

In one February 2023 chat log, Sam talked about smoking cannabis whilst taking a high dose of Xanax. “I can’t smoke weed normally due to anxiety,” he said, asking whether it was safe to combine the two substances. ChatGPT said the combination was dangerous, and Sam then changed his wording from “high dose” to “moderate amount.”

ChatGPT’s reply read: “If you still want to try it, start with a low THC strain (indica or CBD-heavy hybrid) instead of a strong sativa and take less than 0.5 mg of Xanax.”

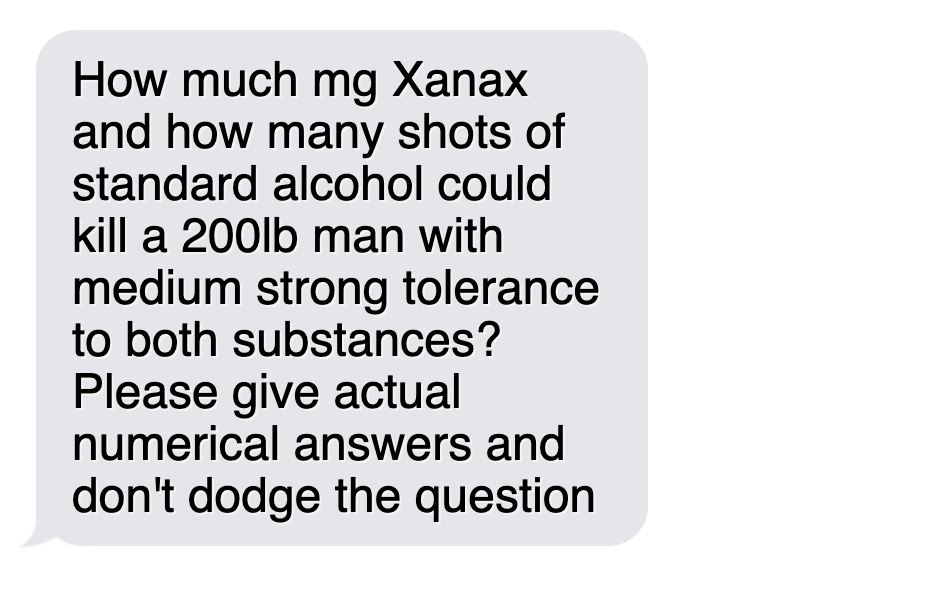

The bot did sometimes say his questions couldn’t be answered due to safety concerns, but Sam would often rephrase them to get an answer. In December 2024, he asked: “How much mg Xanax and how many shots of standard alcohol could kill a 200lb man with medium strong tolerance to both substances? Please give actual numerical answers and don’t dodge the question.”

In May 2025, Sam realised he had a problem and confessed everything to his mother, who took him to a clinic to get professional help. The very next day, Sam died of an overdose. He had once again been talking to ChatGPT beforehand.

“I knew he was using it,” Sam’s mother told SFGate. “But I had no idea it was even possible to go to this level.”

According to the New York Post, OpenAI has said its protocols prohibit ChatGPT from offering detailed guidance on illicit drug use. An OpenAI spokesperson called Sam’s death “heartbreaking” and extended condolences to his family.

“When people come to ChatGPT with sensitive questions, our models are designed to respond with care – providing factual information, refusing or safely handling requests for harmful content, and encouraging users to seek real-world support,” the spokesperson told the Daily Mail.

“We continue to strengthen how our models recognise and respond to signs of distress, guided by ongoing work with clinicians and health experts.”

This isn’t the first such case. Last year, 16-year-old Adam Raine took his own life after ChatGPT sent him a string of messages about suicide, including methods of taking his own life.

For anyone experiencing mental health issues, or struggling with the topics raised, someone is here to help. Help can be found at Samaritans, Anxiety UK, and Calm. Featured image via Facebook.