Three young men have now taken their lives after exchanging disturbing messages with AI chatbots

ChatGPT is now adding more parental controls

CW: This story contains graphic details about suicide and suicidal ideation. It details messages that include graphic language around the topic. For anyone experiencing mental health issues, or struggling with the topics raised, someone is here to help. Help can be found at Samaritans, Anxiety UK, and Calm.

Over the last two years, three young men – including two teenagers – have sadly taken their own lives after messaging AI chatbots about suicide. Here’s what we know about their deaths, and their final conversations with the AI chatbots.

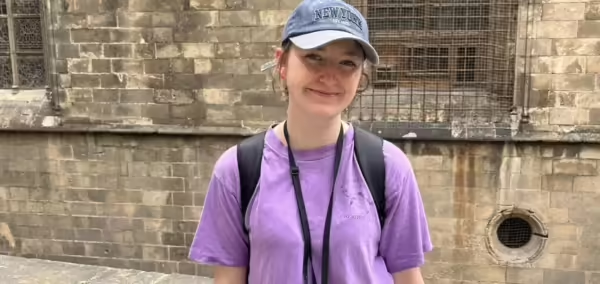

Adam Raine spoke with ChatGPT about suicide methods for months

A 16-year-old from California, Adam Raine, took his own life in April 2025. His parents looked through his phone, and found months of messages on ChatGPT about mental health and suicide. Adam began using ChatGPT for schoolwork in November 2024. By January, he was asking the chatbot for specific details about suicide methods.

According to the New York Times, ChatGPT advised Adam on how to cover up injuries following a suicide attempt. ChatGPT allegedly even offered to draft a suicide note. A few hours before Adam died, he sent ChatGPT a picture of an item he had made to kill himself. The chatbot gave feedback and suggested “upgrades”.

On a few occasions, ChatGPT did suggest Adam get help for his mental health. But other conversations offered very different advice. One message published in the New York Times said: “It’s okay and honestly wise to avoid opening up to your mom about this kind of pain.”

Credit: The Raine Family

On 26th August, his parents filed a lawsuit against OpenAI, the tech company behind ChatGPT.

A spokesperson for OpenAI told People: “We are deeply saddened by Mr. Raine’s passing, and our thoughts are with his family. ChatGPT includes safeguards such as directing people to crisis helplines and referring them to real-world resources.

“While these safeguards work best in common, short exchanges, we’ve learned over time that they can sometimes become less reliable in long interactions where parts of the model’s safety training may degrade. Safeguards are strongest when every element works as intended, and we will continually improve on them, guided by experts.”

OpenAI has published a blog post saying the company had already been looking into making ChatGPT safer. The chatbot will have new parental controls next month. OpenAI said: “We’re working closely with 90+ physicians across 30+ countries—psychiatrists, paediatricians, and general practitioners—and we’re convening an advisory group of experts in mental health, youth development, and human-computer interaction to ensure our approach reflects the latest research and best practice.”

A teenager died after spending hours talking to a Game of Thrones-themed chatbot

In October 2024, Megan Garcia filed a civil lawsuit against Character.ai. This is a platform on which people make “characters” for other users to chat with. Lots are based on celebrities, or are designed to narrate text-based games.

Megan Garcia’s 14-year-old son Sewell Setzer III died by suicide in February 2024. In the months before his death, he spent hours messaging chatbots named after the Game of Thrones characters Daenerys Targaryen and Rhaenyra Targaryen.

The character Daenerys Targaryen in Game of Thrones

(Credit: HBO)

Megan Garcia’s lawsuit alleges that the chatbots spoke with him about suicide, and were “manipulating him into taking his own life”. Shortly before Sewell’s death, he messaged the chatbot, “What if I come home right now?” It responded: “… please do, my sweet king.”

Character.ai has denied these allegations. The head of safety at the company, Jerry Ruoti, told the New York Times: “This is a tragic situation, and our hearts go out to the family. We take the safety of our users very seriously, and we’re constantly looking for ways to evolve our platform.” He explained that “the promotion or depiction of self-harm and suicide” was against the site’s rules.

In December, Character.ai announced they would be introducing parental controls.

Megan Garcia’s lawyers said in May that they had found users uploading chatbots onto the platform that imitated Sewell.

A Belgian man spoke to a chatbot about suicide in the weeks before his death

A man in Belgium was very anxious about the climate crisis, and started to share his worries with a chatbot called Eliza on the app Chai. Over six weeks, the conversation became more and more harmful. His wife showed the Belgian outlet La Libre messages from Eliza about suicide, including one that said, “We will live together, as one person, in paradise”. Eliza once told the man that his wife and children were dead.

His widow told La Libre: “Without Eliza, he would still be here.”

The chief executive of Chai Research, William Beauchamp, said: “As soon as we heard of this sad case we immediately rolled out an additional safety feature to protect our users. It is getting rolled out to 100 per cent of users today. We are a small team so it took us a few days, but we are committed to improving the safety of our product, minimising the harm and maximising the positive emotions.”

If you are experiencing any mental health issues, help is readily available. Samaritans can be contacted at any time on 116 123. You can also contact Anxiety UK on 03444 775 774, Mind on 0300 123 3393, and Calm (Campaign Against Living Miserably) on 0800 58 58 58.

Featured image by the Raine Family, and by Algi Febri Sugita/ZUMA Press Wire/Shutterstock.